AI model accelerates design process

A new machine learning model can save engineers time and computation resources by using AI to quickly and accurately predict simulation results.

One of the primary challenges of the engineering design process is minimizing the time between iterations. Often, an engineer must perform a costly virtual analysis and evaluate the results before updating the design and repeating the process. Lengthy computing times are a bottleneck in the design pipeline, especially during the early stages, when high-fidelity simulations and analyses can waste valuable time and resources. To address this issue, data-driven machine learning surrogate models have emerged to reduce time between design iterations.

“Simulations inform how you can redesign the part or make adjustments so that you don't have to waste time or material building something that's going to just fail,” said Kevin Ferguson, Ph.D. student in mechanical engineering.

Source: College of Engineering

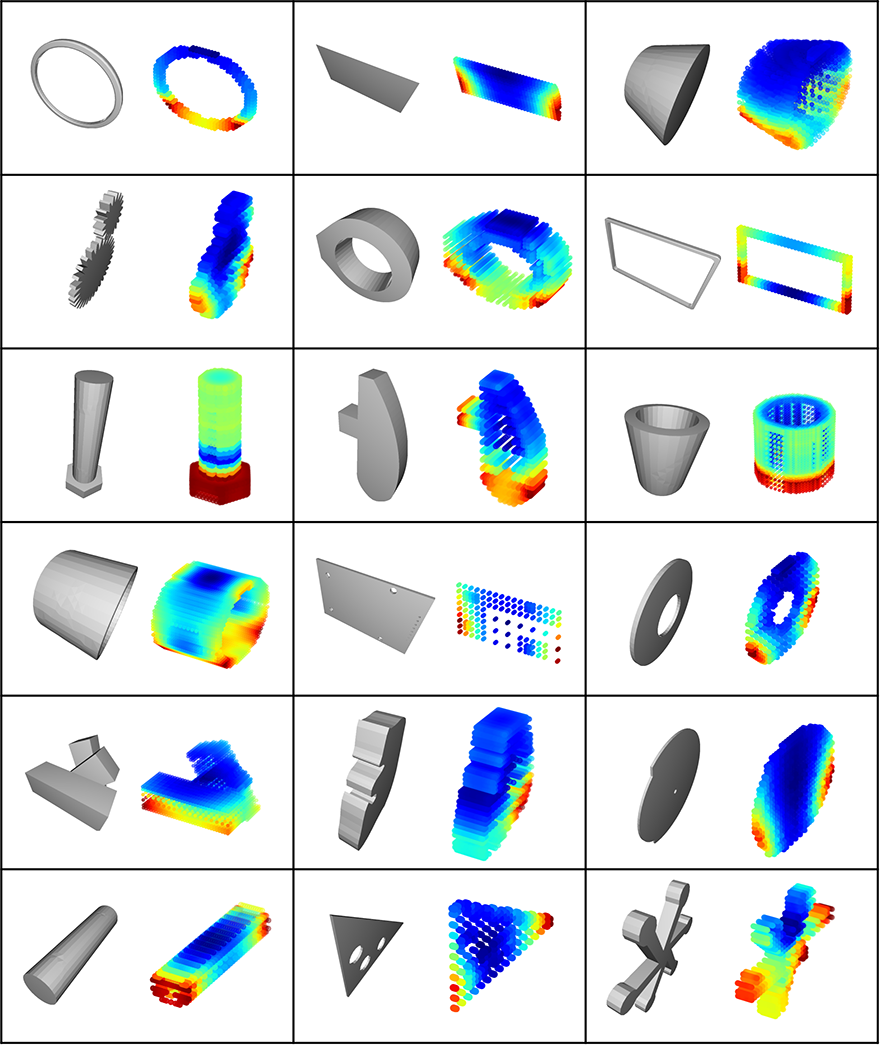

TAG U-NET predicts how parts will react to stress. Input CAD models are displayed on the left, with output predictions on the right.

Ferguson and fellow researchers at Carnegie Mellon University have developed TAG U-NET, a topology-agnostic graph U-Net. This graph convolutional network can be trained to take any mesh or graph structure as input and output a prediction of how that design will react to different stressors, including whether and how it will distort.
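To give a rough sense of why a graph convolutional network can accept any mesh, the sketch below shows one generic mean-aggregation graph convolution step in plain Python. It is an illustration only, not the researchers' implementation: the function name `graph_conv`, the scalar weights `w_self` and `w_neigh`, and the toy meshes are all assumptions made for this example.

```python
def graph_conv(node_features, edges, w_self, w_neigh):
    """One generic mean-aggregation graph convolution step (illustrative only).

    node_features: one scalar feature per mesh node
    edges: undirected (i, j) node index pairs describing mesh connectivity
    w_self, w_neigh: hypothetical weights that would normally be learned
    """
    # Build an adjacency list from the edge list.
    neighbors = {i: [] for i in range(len(node_features))}
    for a, b in edges:
        neighbors[a].append(b)
        neighbors[b].append(a)

    updated = []
    for i, x in enumerate(node_features):
        nbrs = neighbors[i]
        # Average the neighbors' features; isolated nodes contribute 0.
        mean_nbr = sum(node_features[j] for j in nbrs) / len(nbrs) if nbrs else 0.0
        # Combine self and neighbor information, then apply a ReLU.
        updated.append(max(0.0, w_self * x + w_neigh * mean_nbr))
    return updated


# The same function runs on meshes with different node counts and connectivity.
triangle = graph_conv([1.0, 2.0, 3.0], [(0, 1), (1, 2), (2, 0)], w_self=0.5, w_neigh=0.5)
chain = graph_conv([1.0, 2.0, 3.0, 4.0], [(0, 1), (1, 2), (2, 3)], w_self=0.5, w_neigh=0.5)
```

Because each node only looks at whatever neighbors it happens to have, the same weights apply to a three-node triangle and a four-node chain, which is the sense in which such a layer does not depend on a fixed topology.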

“The model we developed can be used in any context where you want to predict a physical field throughout a 3D shape,” said Levent Burak Kara, a TAG U-NET researcher and professor of mechanical engineering. “In our case, the primary application was speeding up additive manufacturing simulations. A simulation can take hours or even days to complete, but a data-driven model like this can give you an estimate instantly. It's exciting to think about the impact this could have for engineers.”

TAG U-NET was created by simulating how thousands of different shapes react to stressors like temperature and pressure, then using those results to train the machine learning model.

“Instead of actually doing the computation of running the simulated build process, you train a machine learning model to learn from a lot of previously run simulations for this particular problem,” said Ferguson. “If you have enough examples, then the machine learning model is going to be able to identify certain characteristics that might be similar to past shapes it’s constructed in previous simulations, and it’ll know what kinds of outputs you’re going to get.”

The dataset covers a wide range of shapes, with subtle to drastic variation. This scale and variety allow the model to achieve more than 85 percent accuracy when predicting simulation results. After the initial training process, TAG U-NET can make predictions for a part in less than one second. If used iteratively, TAG U-NET could save significant amounts of time and resources compared to running full simulations throughout the design process.

Source: College of Engineering

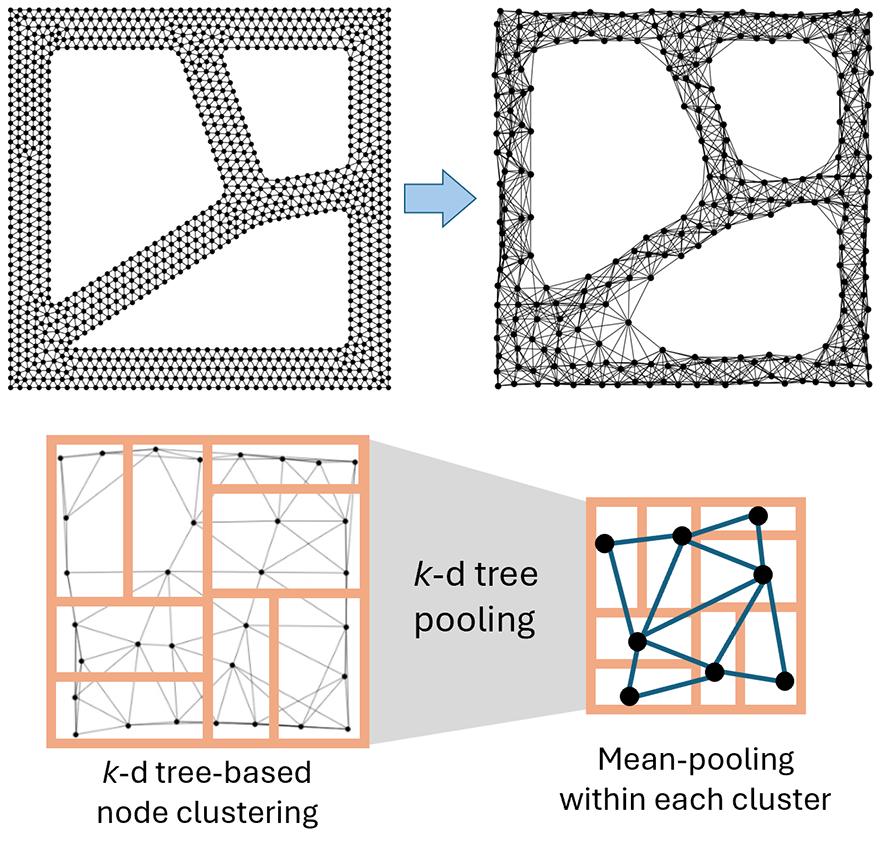

k-d tree pooling, demonstrated on a 2D mesh

TAG U-NET also uses k-d tree pooling, a mesh-coarsening method that reduces redundant shape information while preserving important features like node density.
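As a minimal sketch of the general idea behind k-d tree based coarsening, the Python below recursively splits a set of mesh nodes at the median along alternating coordinate axes and merges each resulting leaf into one averaged node. This is an assumption-laden illustration rather than the pooling layer used in TAG U-NET: the names `kd_clusters` and `kd_pool`, the `leaf_size` parameter, and the choice to average coordinates and features are all hypothetical.

```python
from statistics import fmean


def kd_clusters(points, indices=None, depth=0, leaf_size=2):
    """Group node indices by recursive median splits along alternating axes."""
    if indices is None:
        indices = list(range(len(points)))
    if len(indices) <= leaf_size:
        return [indices]
    axis = depth % len(points[0])  # cycle through x, y (and z in 3D)
    ordered = sorted(indices, key=lambda i: points[i][axis])
    mid = len(ordered) // 2  # median split keeps both halves equally populated
    return (kd_clusters(points, ordered[:mid], depth + 1, leaf_size)
            + kd_clusters(points, ordered[mid:], depth + 1, leaf_size))


def kd_pool(points, features, leaf_size=2):
    """Coarsen a node set: each k-d tree leaf becomes one averaged node."""
    clusters = kd_clusters(points, leaf_size=leaf_size)
    dims = range(len(points[0]))
    coarse_points = [tuple(fmean(points[i][d] for i in c) for d in dims)
                     for c in clusters]
    coarse_features = [fmean(features[i] for i in c) for c in clusters]
    return coarse_points, coarse_features, clusters


# Toy 2D node set: four tightly packed nodes and four widely spaced ones.
points = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1),
          (1.0, 1.0), (2.0, 2.0), (3.0, 1.0), (2.0, 0.0)]
features = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
coarse_points, coarse_features, clusters = kd_pool(points, features)
```

Splitting at the median means every leaf holds roughly the same number of nodes, so regions of the mesh that started with more nodes still end up with more coarse nodes. In the toy data, the tightly packed corner and the sparse region each keep two coarse nodes.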

Future work seeks to expand the range of materials for which TAG U-NET can predict simulation results, and to assess how model size affects performance to determine whether increasing capacity yields significant improvement.

The researchers include Ferguson, Kara, Yu-hsuan Chen, Yiming Chen, Andrew Gillman, and James Hardin.