Multimodal machine learning model increases accuracy

Researchers in the department of mechanical engineering have developed a novel machine learning model that combines graph neural networks with transformer-based language models to predict the adsorption energy of catalyst systems.

Identifying optimal catalyst materials for specific reactions is crucial for advancing energy storage technologies and sustainable chemical processes. To screen catalysts, scientists must understand the adsorption energy of the systems involved, a property that machine learning (ML) models, particularly graph neural networks (GNNs), have predicted successfully.

However, GNNs carry a strong inductive bias and require atomic structures as input. Although this approach encodes structure accurately, it leaves a gap when it comes to using experimentally observable features.

Building on earlier research showing that a transformer-based language model could predict adsorption energy from human-readable text alone, without preprocessing, researchers in Carnegie Mellon University’s Department of Mechanical Engineering developed a methodology that enhances the model through multimodal learning.

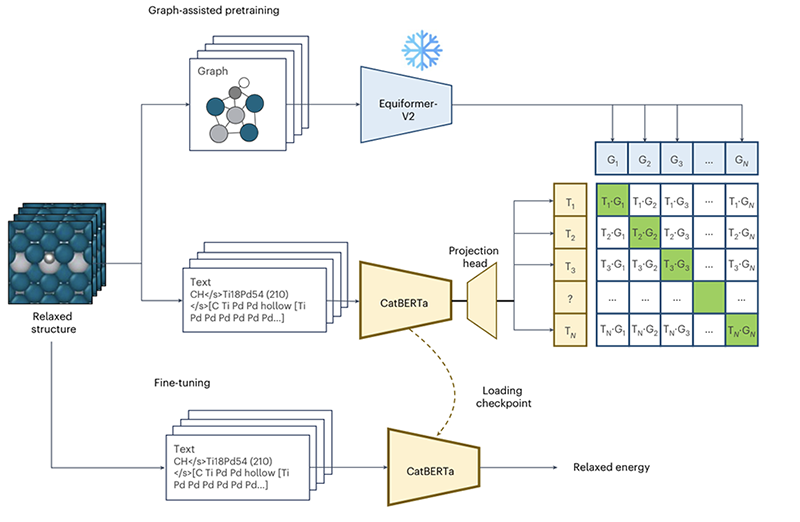

The training process consists of two steps: graph-assisted pretraining and energy prediction fine-tuning.

“When it comes to chemical data, by integrating different modalities, we can construct a comprehensive view. Inspired by this, we used multimodal learning to improve the performance of the predictive language model,” explained Janghoon Ock, a Ph.D. candidate in chemical engineering working in Amir Barati Farimani’s lab.

Their approach connects the different model setups and strengthens the language model’s ability to complete prediction tasks without requiring task-specific labels.

The first, self-supervised step, graph-assisted pretraining, reduces the mean absolute error of energy predictions for adsorption configurations by 7.4-9.8%.
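For readers unfamiliar with the metric: mean absolute error averages the absolute gap between predicted and reference energies, and a figure such as 7.4-9.8% describes a relative drop in that average compared with a model trained without the pretraining step. The short Python sketch illustrates only that arithmetic. The energy values are hypothetical and the function names are illustrative; none of it comes from the researchers' code or data.

```python
def mean_absolute_error(predicted, reference):
    """Average of |prediction - reference| over all samples."""
    if not predicted or len(predicted) != len(reference):
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(p - r) for p, r in zip(predicted, reference)) / len(predicted)


def relative_reduction(mae_baseline, mae_new):
    """Percentage drop in MAE relative to the baseline model."""
    return 100.0 * (mae_baseline - mae_new) / mae_baseline


# Hypothetical adsorption energies in eV (illustrative only, not from the study)
reference = [-0.52, 1.10, 0.34, -1.75]
baseline_pred = [-0.30, 1.40, 0.10, -1.50]    # model without pretraining (hypothetical)
pretrained_pred = [-0.32, 1.37, 0.12, -1.52]  # model with pretraining (hypothetical)

mae_base = mean_absolute_error(baseline_pred, reference)
mae_new = mean_absolute_error(pretrained_pred, reference)
print(f"baseline MAE:   {mae_base:.3f} eV")
print(f"pretrained MAE: {mae_new:.3f} eV")
print(f"reduction:      {relative_reduction(mae_base, mae_new):.1f}%")
```

Because the reduction is relative, the same percentage can correspond to different absolute improvements in eV depending on how large the baseline error is.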

The work, published in Nature Machine Intelligence, also incorporates a generative language model into the framework to bypass the need for structural information. This allows the framework to predict initial energy estimates without relying on atomic coordinates.


“My ultimate goal is to build accessible and interactive methodologies that non-computational scientists can use,” said Ock. “LLMs can be key to achieving that accessibility and interactivity. While that is not the case right now, we are moving in the right direction.”

“Being able to produce energy estimates with just chemical symbols and surface orientations is a leap forward for accessible ML models,” emphasized Barati Farimani, associate professor of mechanical engineering.

In the future, the team aims to develop a more comprehensive language-based platform for catalyst design by incorporating additional functional tools and equipping the platform with reasoning and planning capabilities in an agent-like framework.