Emotion detection system puts a smile on their face

Silicon Valley students use AI to help job seekers improve performance and build confidence.

When Haoguang Cai and Khem Agarwal decided to work on a project to present at the annual IEEE Rising Stars conference, they knew they wanted to build something that would be both interesting and useful to the Institute of Electrical and Electronics Engineers audience of students and young technical professionals.

The Silicon Valley graduate students, who care about making AI that benefits people, came up with an idea that achieved both goals. They knew that IEEE Rising Stars attendees are focused on career opportunities, so they set to work devising a system that could help job seekers improve their performance and become more confident in job interviews.

Cai and Agarwal also knew that many of their fellow students practiced interviewing with friends or colleagues. But when mock interview options were exhausted or not available, they still wanted more practice and helpful feedback.

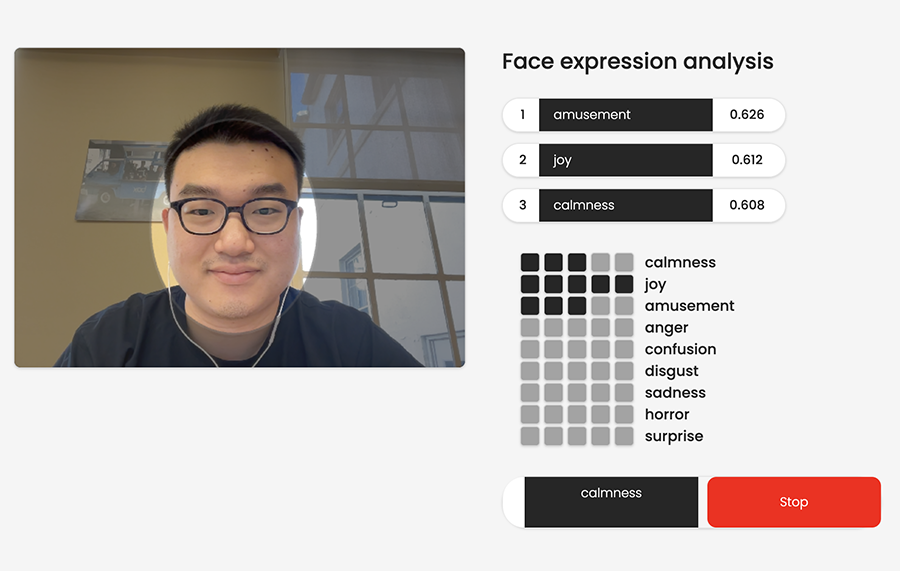

Facial expression analysis, which users can view in real time while answering interview questions, indicates the emotion they are conveying.

The system that the pair developed and later demonstrated at the Las Vegas conference analyzes a user’s facial expressions and speech to detect what emotions they are conveying, and tracks their gaze or eye motion to detect where they are focusing their attention. Feedback, both in real time and after the interview session, gives users insight into how they are likely being perceived during an interview.

“When I showed the live demo on stage, everyone wanted to try it. They really saw its potential and lined up after the workshop to try it and learn how we did it,” said Cai.

The project originated in their Software Engineering Management class. Their instructor, Catherine Fang, was scheduled to speak at the January 2024 conference. The distinguished service professor is an entrepreneur who has co-founded two companies, holds multiple U.S. patents, has worked for LinkedIn, Yahoo, and Sun Microsystems, and serves as an associate editor for the IEEE Reliability Society.

“I’m so proud of all of our students, but this team really stood out. They have a very solid technical and product management background. Their presentation was a big success,” said Fang.

She added that emotion detection technology is an emerging trend with many useful consumer applications that she has seen demonstrated at other big conferences.

Cai and Agarwal, who will both earn their Master of Science in Software Management (MSSM) from the Integrated Innovation Institute in December 2024, knew how to program the machine learning model to identify and measure facial expressions and eye tracking. But they needed a data set with a large number of labeled images that represented a diverse set of people, including those of varying color, age, and gender.

They found Hume AI, a research lab and technology company that uses artificial intelligence to measure emotional expression, which was able to provide access to its extensive data set through its application programming interface (API). APIs are commonly used in software development to enable integration between different systems or services, for purposes such as accessing data from a remote server.
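
For readers curious what accessing data from a remote server through an API can look like in practice, here is a minimal sketch in Python. It is not the students' code and not Hume AI's actual API: the endpoint URL, the API key, the request fields, and the response shape are all hypothetical placeholders chosen for illustration. The sketch only builds the request and parses a made-up response, so it runs without a network connection.

```python
import json
from urllib.request import Request

# Hypothetical endpoint and key: placeholders for illustration only,
# not Hume AI's actual API.
API_URL = "https://api.example.com/v1/expressions"
API_KEY = "YOUR_API_KEY"


def build_request(image_b64: str) -> Request:
    """Package one base64-encoded video frame as a JSON POST request."""
    body = json.dumps({"image": image_b64}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {API_KEY}",
    }
    return Request(API_URL, data=body, headers=headers, method="POST")


def top_emotion(response_text: str) -> tuple[str, float]:
    """Return the highest-scoring emotion from a JSON response (assumed shape)."""
    scores = json.loads(response_text)["emotions"]
    name = max(scores, key=scores.get)
    return name, scores[name]


# A made-up response in the assumed shape, standing in for a server reply.
sample_response = '{"emotions": {"joy": 0.72, "calmness": 0.18, "anxiety": 0.10}}'

request = build_request("aGVsbG8=")
emotion, score = top_emotion(sample_response)
print(request.get_method(), request.full_url)
print(emotion, score)
```

In a real application, the request would be sent with something like `urllib.request.urlopen(request)`, and the provider's own documentation would define the actual endpoint, authentication, and response format.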

Jeremy Hadfield, a technical product manager at Hume AI, was a recent guest at a CMU Silicon Valley event.

Cai hopes to complete his transition from a machine learning engineer to product manager in the AI field after graduation. Agarwal is planning for a career that will enable him to use AI to create virtual reality learning tools.

They both agree that the challenge and extra work of building and demonstrating their emotion detection system were worthwhile and only furthered their desire to use AI to build products that will help people.

Pictured, top: At the IEEE Rising Stars conference in January 2024, Haoguang Cai demonstrates the emotion detection system that he and Khem Agarwal developed.