3D displays that accommodate the human eye

Researchers at Carnegie Mellon University have engineered a new technology to enable natural accommodation cues in 3D displays.

Have you ever felt dizzy after watching a 3D movie or using a virtual reality headset? If so, it is probably because you were subconsciously able to detect the subtle differences between the virtual 3D scene presented to you and the real world.

The holy grail for 3D displays is to produce a scene that, to our eyes, is indistinguishable from reality. To achieve this, the display would need to satisfy every perceptual cue that the human visual system uses to sense the world. Our eyes perceive a wide gamut of colors and a large range of intensities, and they rely on numerous cues to perceive depth. Of these capabilities, depth perception is arguably the hardest to deceive. As it turns out, this has immense implications for 3D TVs, movies, and virtual and augmented reality devices.

Source: Department of Electrical and Computer Engineering

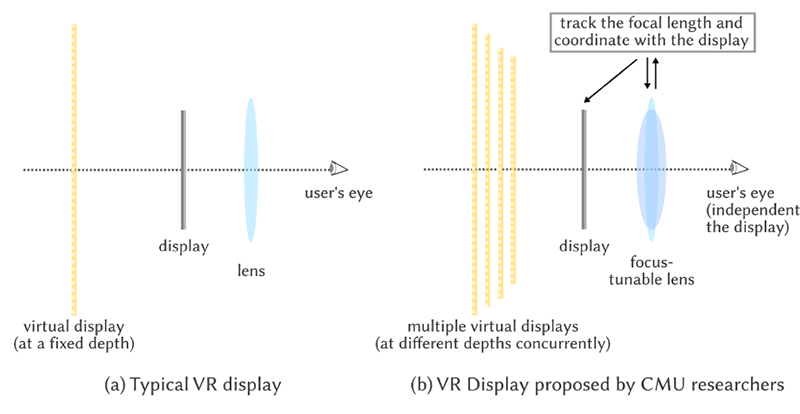

Comparison of a typical VR display to the proposed VR display. The proposed display replaces the lens used in typical VR displays with one whose focal length can be changed. By changing the focal length at a high frequency, tracking that change, and coordinating it with a fast display, the proposed display overcomes the limitations faced by most existing displays.

Our eyes perceive depth using two dominant cues. The first cue is called vergence, where the eyes rotate to bring the same object into the center of the visual field of both eyes; when the object is nearby (say, close to your nose), the eyes rotate a lot to fixate on it, and when it is far away, they rotate less. This is a strong cue for perceiving depth. The second cue is called accommodation, where the eye changes the focal length of the ocular lens to bring an object into focus. Much like a camera, an object appears sharp only when the eye is focused on it. Vergence and accommodation drive each other. When the eyes fixate on an object using vergence, the accommodation cues ensure that the object comes into focus. Without this tight coupling between vergence and accommodation, we would not be able to see the world in all its sharpness, nor would we be able to perceive depth as well.
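To put rough numbers on these two cues, the following minimal sketch computes the vergence angle and the accommodation demand for an object at a given distance. It is an illustration under simplifying assumptions, not part of the researchers' work: the interpupillary distance of 63 mm is an assumed typical value, the object is assumed to sit straight ahead on the midline between the eyes, and the function names are hypothetical.

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at distance_m. ipd_m is an assumed interpupillary distance."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))

def accommodation_diopters(distance_m):
    """Focusing demand on the eye, in diopters (1 / distance in meters)."""
    return 1.0 / distance_m

# Near objects demand large eye rotation and strong focusing;
# far objects demand very little of either.
for d in (0.1, 0.5, 2.0, 10.0):
    print(f"{d:5.1f} m: vergence {vergence_angle_deg(d):6.2f} deg, "
          f"accommodation {accommodation_diopters(d):5.2f} D")
```

Both quantities fall off together as the object moves away, which is one way to see why the visual system can couple them so tightly.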

Aswin Sankaranarayanan and Vijayakumar Bhagavatula, professors in electrical and computer engineering, along with graduate student Rick Chang, have engineered a new technology to enable natural accommodation cues in 3D displays. The technology works by generating many virtual displays located at different depths. These depths are spaced densely enough that we cannot distinguish the resulting scene from the real world, at least in terms of depth perception. The idea of placing layers of displays at different depths is not new; however, the proposed technology can generate an order of magnitude more displays than existing methods, thereby providing unprecedented immersion.
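One way to picture how a lens with an adjustable focal length could place virtual displays at many depths is the textbook thin-lens relation. The sketch below is an idealization with assumed numbers, not the researchers' design: the display-to-lens distance of 5 cm, the nearest plane at 25 cm, the count of five planes, and all function names are hypothetical, and the eye is assumed to sit right at the lens.

```python
import math

def focal_plane_diopters(n_planes, nearest_m):
    """Depths of n_planes virtual displays, evenly spaced in diopters
    from infinity (0 D) to the nearest plane (1 / nearest_m D)."""
    max_d = 1.0 / nearest_m
    return [max_d * i / (n_planes - 1) for i in range(n_planes)]

def lens_power_for_plane(display_dist_m, plane_diopters):
    """Thin-lens power (diopters) that images a display at display_dist_m
    into a virtual image located at plane_diopters."""
    return 1.0 / display_dist_m - plane_diopters

def plane_distance_m(plane_diopters):
    """Convert a depth in diopters back to meters (0 D is infinity)."""
    return math.inf if plane_diopters == 0 else 1.0 / plane_diopters

DISPLAY_DIST_M = 0.05  # assumed display-to-lens distance
for depth_d in focal_plane_diopters(n_planes=5, nearest_m=0.25):
    power = lens_power_for_plane(DISPLAY_DIST_M, depth_d)
    print(f"plane at {plane_distance_m(depth_d):6.2f} m "
          f"({depth_d:.1f} D) needs lens power {power:.1f} D")
```

In this idealization, a modest change in lens power moves the virtual display from infinity to arm's length, so sweeping the power quickly while the display refreshes in step would visit many depths in turn.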

Source: Department of Electrical and Computer Engineering

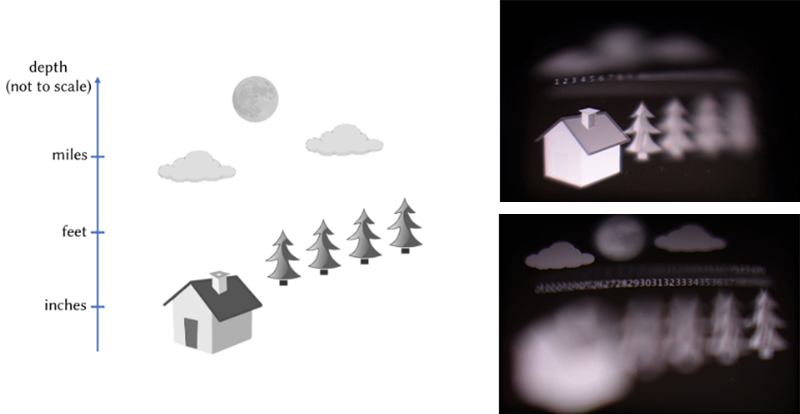

The virtual scene and photos captured by a camera changing its focus. Note that the camera is independent of the display.

One benefit of the proposed display technology can be seen in the image above. The scene shown in the figure contains objects across a wide depth range. For example, the moon is at infinity, the clouds are miles away, the trees are yards away, and the house is inches away from us. The researchers photographed the scene with a camera focused at different depths, mimicking the change of focus of our eye, and created a video from these images. The camera is entirely independent of the display, meaning that the display does not know where the camera is or where the eye is looking. The display generates natural focus and defocus cues; an object becomes sharp when the camera is focused on it and blurred when the focus of the camera moves away. This means that the display generates the accommodation cue.
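The focus and defocus behavior described here can be approximated with a standard small-angle rule: the angular size of the blur is roughly the aperture diameter times the difference, in diopters, between the focus depth and the object depth. The sketch below applies that rule to a scene like the one in the figure. The 4 mm aperture and the specific distances are illustrative assumptions, and the function name is hypothetical; none of these values come from the researchers.

```python
import math

def blur_angle_rad(aperture_m, focus_m, object_m):
    """Approximate angular blur diameter (radians) of an object at object_m
    when a camera or eye with the given aperture is focused at focus_m.
    Distances may be math.inf, which corresponds to 0 diopters."""
    return aperture_m * abs(1.0 / focus_m - 1.0 / object_m)

APERTURE_M = 0.004  # assumed 4 mm aperture
scene = {           # illustrative distances in meters
    "moon": math.inf,
    "clouds": 3000.0,
    "trees": 5.0,
    "house": 0.25,
}

for focus_name, focus_m in scene.items():
    blurs = ", ".join(
        f"{name} {math.degrees(blur_angle_rad(APERTURE_M, focus_m, dist)) * 60:5.1f}'"
        for name, dist in scene.items()
    )
    print(f"focused on {focus_name:6s}: blur in arcminutes -> {blurs}")
```

Under these assumptions, the moon and clouds are nearly indistinguishable in focus, while the nearby house blurs strongly whenever the focus moves to the distant objects.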

Virtual and augmented reality devices stand to benefit significantly from this work. Today’s devices produce immersion by satisfying only the vergence cue and, for the most part, ignoring accommodation cues. This has consequences for the user experience, leading to discomfort and fatigue after long use. Mismatched accommodation cues also reduce the effective resolution of the display because of defocus blur. Thus, the technology developed by Sankaranarayanan, Bhagavatula, and Chang is timely and could pave the way for a much more immersive AR/VR experience.

This work was published and presented at the SIGGRAPH Asia conference in December 2018 and is also described in a short video. The National Science Foundation supported the research through a Faculty Early Career Development (CAREER) Program award.