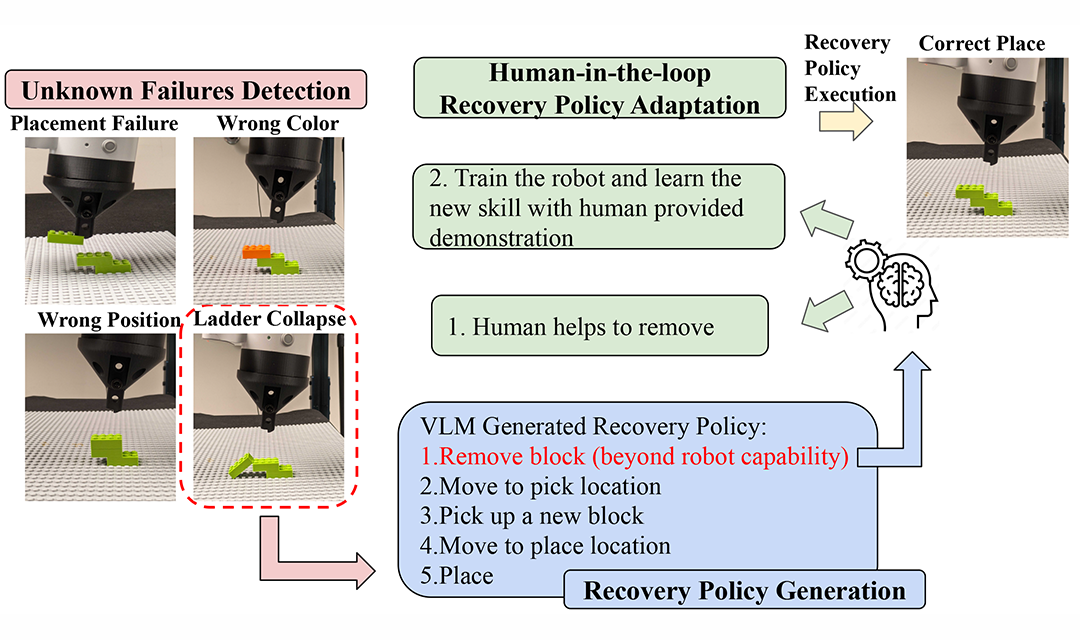

Researchers are automating failure detection and recovery for robotic systems in manufacturing environments using large language models (LLMs), relying on GPT-4's visual understanding to detect failures from images and summarize them in text. The project uses two assembly testbeds for error detection and recovery: a Quadro toy (assembled from pipes and connectors) and the Lego assembly testbed currently at Mill 19.

Online detection of failures or inappropriate actions can help manufacturing robots operate more safely and intelligently. This project combines multiple complementary sensory modalities, including semantic, visual, haptic, and kinematic signals, to detect previously unknown failure modes. It will produce a software package for detecting, summarizing, and recovering from failure modes, as well as a dataset of various failure scenarios.
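The description does not say how the modalities are combined, so the following is only a minimal late-fusion sketch of one common way to flag *previously unknown* failures: fit a model of nominal behavior per modality from successful runs only, score each new observation by how far it deviates (largest absolute z-score), and raise a failure flag when a weighted average of the per-modality scores exceeds a threshold. It assumes each modality (for example haptic force or kinematic tracking error) has already been reduced to a numeric feature vector; the names `ModalityModel`, `fuse`, `detect_failure`, the equal weights, and the `threshold=3.0` default are all hypothetical and not part of the project's software.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class ModalityModel:
    """Hypothetical per-modality model of nominal behavior (feature means and stds)."""
    means: list[float]
    stds: list[float]

    @classmethod
    def fit(cls, nominal: list[list[float]]) -> ModalityModel:
        # Fit only on successful (nominal) runs, so any large deviation counts
        # as anomalous even if that failure mode was never seen before.
        columns = list(zip(*nominal))
        means = [mean(c) for c in columns]
        # Floor the std to avoid division by zero for constant features.
        stds = [max(pstdev(c), 1e-6) for c in columns]
        return cls(means, stds)

    def score(self, x: list[float]) -> float:
        # Largest absolute z-score across this modality's features.
        return max(abs(v - m) / s for v, m, s in zip(x, self.means, self.stds))


def fuse(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-modality anomaly scores (late fusion)."""
    total = sum(weights[name] for name in scores)
    return sum(weights[name] * scores[name] for name in scores) / total


def detect_failure(
    models: dict[str, ModalityModel],
    weights: dict[str, float],
    observation: dict[str, list[float]],
    threshold: float = 3.0,  # hypothetical cutoff, would need tuning on real data
) -> tuple[bool, dict[str, float]]:
    """Return (failure_flag, per-modality scores) for one observation."""
    scores = {name: model.score(observation[name]) for name, model in models.items()}
    return fuse(scores, weights) > threshold, scores


if __name__ == "__main__":
    # Toy nominal data: peak gripper force (N) and joint tracking error (rad).
    models = {
        "haptic": ModalityModel.fit([[10.0], [11.0], [9.0], [10.0]]),
        "kinematic": ModalityModel.fit([[0.1], [0.2], [0.1], [0.2]]),
    }
    weights = {"haptic": 1.0, "kinematic": 1.0}
    print(detect_failure(models, weights, {"haptic": [10.5], "kinematic": [0.15]}))
    print(detect_failure(models, weights, {"haptic": [20.0], "kinematic": [0.5]}))
```

A weighted average lets a strong signal in one modality be tempered by the others; replacing `fuse` with `max(scores.values())` would instead flag a failure whenever any single modality looks anomalous. The per-modality scores returned alongside the flag also indicate which sensor drove the decision, which could feed a text summary of the failure.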